I am running the TFM cylinder case with an initial solids bed height of 0.30 m, but the simulation does not start.

Please find the bug report attached.

I also want to run the simulation on multiple cores to make it faster. What should I do? Please suggest.

3d-ftm-fb1_2024-01-25T225128.713383.zip (86.7 KB)

This is the error message:

Error from cartesian_grid/get_stl_data.f:671

Error: No Cartesian grid boundary condition specified.

At least one BC_TYPE must start with CG (for example CG_NSW)

Since you are using an STL file to define the geometry, you must assign a boundary condition that uses the STL file. Make sure you check “Select facets (STL)” and select your STL geometry “cylinder”.
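The rule quoted in the error message can be restated as a small check. This is a Python sketch of that rule only, not the Fortran code in get_stl_data.f, and it does not cover the second requirement above (that the BC is actually assigned to the STL facets). The case-insensitive comparison is an assumption.

```python
def has_cg_boundary(bc_types):
    """Return True if at least one BC_TYPE starts with 'CG' (e.g. 'CG_NSW'),
    the condition stated in the error message. Empty entries are ignored."""
    # Comparison is made case-insensitive here (assumption, not MFiX behavior).
    return any(t.strip().upper().startswith("CG") for t in bc_types if t)


print(has_cg_boundary(["MI", "PO"]))            # False: triggers the error above
print(has_cg_boundary(["MI", "PO", "CG_NSW"]))  # True
```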

However, your STL file is larger than the computational domain and its normals are pointing in the wrong direction. You will need to fix that before continuing. I suggest you start with some tutorials to get familiar with the meshing procedure (see the Tutorials section of the MFiX 23.4 documentation).
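To check both issues outside the GUI, here is a minimal Python sketch that works on facets given as three (x, y, z) vertices. By STL convention the vertex order follows the right-hand rule around the facet normal, so reversing the winding flips the normal (a tool writing the file would also negate the stored facet normal). The sketch assumes the normals should point toward the fluid, i.e. toward the axis of a cylinder that contains the bed; please confirm the expected orientation in the meshing tutorials. All function names are hypothetical helpers.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def facet_normal(v0, v1, v2):
    """Normal implied by the vertex order (right-hand rule), not normalized."""
    return cross(sub(v1, v0), sub(v2, v0))


def points_toward(facet, point):
    """True if the facet normal points from the facet toward `point`."""
    v0, v1, v2 = facet
    return dot(facet_normal(v0, v1, v2), sub(point, v0)) > 0


def flip(facet):
    """Swap two vertices to reverse the winding, which flips the normal."""
    v0, v1, v2 = facet
    return (v0, v2, v1)


def inside_box(vertices, lo, hi):
    """True if every vertex lies within the domain extents [lo, hi]."""
    return all(lo[d] <= v[d] <= hi[d] for v in vertices for d in range(3))


# One facet of a cylinder wall at x = 0.1, wound so its normal points outward.
wall = ((0.1, 0.0, 0.0), (0.1, 1.0, 0.0), (0.1, 0.0, 1.0))
axis_point = (0.0, 0.5, 0.5)
print(points_toward(wall, axis_point))        # False: points away from the fluid
print(points_toward(flip(wall), axis_point))  # True after flipping
```

Running the same two checks over every facet (normal toward the fluid, vertices inside the domain box) is a quick sanity test before launching the solver.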

I tried to run the TFM cylinder case and ran into several problems:

- First, when I built the solver with SMP and ran the simulation on 16 or fewer cores, the simulation did not start.
- When I built the solver in parallel and ran the simulation, the following error appeared. I searched for it but did not find a solution; kindly help me:

  Error: No Cartesian grid boundary condition specified.
  At least one BC_TYPE must start with CG (for example CG_NSW)

- When I applied the Johnson-Jackson BC, this error appeared: BC_JJ_PS requires GRANULAR_ENERGY=.TRUE.
- Now this error is coming:

  The MFiX solver has terminated unexpectedly
  float overflow in __out_bin_r_mod_MOD_out_bin_r

Please find the bug report attached.

tfm3d_2024-01-26T175350.520486.zip (64.0 MB)

First, you still haven’t flipped the normals of your cylinder. I strongly suggest you spend some time getting familiar with the meshing process and always inspect your mesh before running a simulation.

The overflow comes from your definition of the Nusselt number in calc_gama.f: it takes very large values in some cells. This doesn’t crash the solver itself, but the output fails when the ReactionRates array is converted to single precision. The first step is to figure out why you get such large values.
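To see which values trigger this, here is a small Python sketch (not MFiX code) that mimics the double-to-single conversion with the standard struct module. It assumes out_bin_r writes each ReactionRates entry as a 32-bit float; Python raises OverflowError where the Fortran build reported a float overflow. The Nu values are made up for illustration.

```python
import struct


def overflowing_cells(values):
    """Return the indices whose double-precision value cannot be stored as a
    finite 32-bit float (the kind of conversion that failed in out_bin_r)."""
    bad = []
    for i, v in enumerate(values):
        try:
            # '<f' packs a 4-byte single-precision float; struct raises
            # OverflowError when a finite double is too large for it.
            struct.pack('<f', v)
        except OverflowError:
            bad.append(i)
    return bad


# Hypothetical per-cell Nusselt numbers (Python floats are double precision).
nu = [12.0, 7.5, 1.0e40, 18.0, -2.0e39]
print(overflowing_cells(nu))  # [2, 4]
```

Anything beyond roughly 3.4e38 in magnitude cannot be represented in single precision, so declaring Nu as double precision does not help once the array is written out as 32-bit floats.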

Dear Jeff,

I could not find why the Nu value becomes so large; it is used later to calculate the Gamma reaction rates. Its range should be 5-20.

I store Nu and Gamma in the reaction-rate array, where Nu is used to calculate the Gamma rates. When I did not store Nu, my simulation ran smoothly, but when I stored it with ReactionRates(IJK, 7) = Nu, the simulation did not start and the error was "float overflow in __out_bin_r_mod_MOD_out_bin_r".

As you said, it fails when converting the ReactionRates array to single precision, even though I declared Nu as double precision.

Could you please tell me what I should change so that Nu can be stored in the reaction-rate array?

Also, this error does not happen when I simulate the 2D case.

I guess you could cap the value of Nu at, say, 1000 with something like Nu = max(Nu, 1000.0). That way you can store the array and visualize where it is large. If the simulation does not crash with the cap, the large values may be set in cells that are not actually used.

Dear Jeff,

I have some doubts which are given below:

-

I used ‘Nu = max(Nu, 1000.0)’ and stored the value but it is not working. The simulation did not start and the error was the same floated overflow in __out_bin_r_mod_MOD_out_bin_r.

-

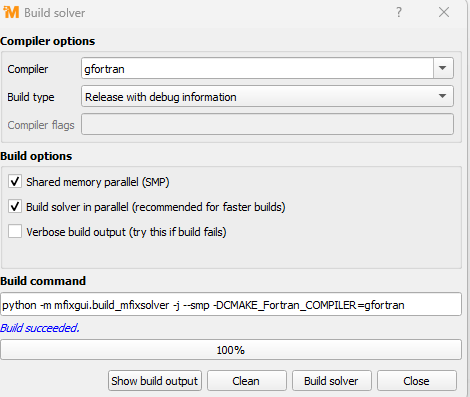

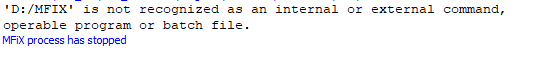

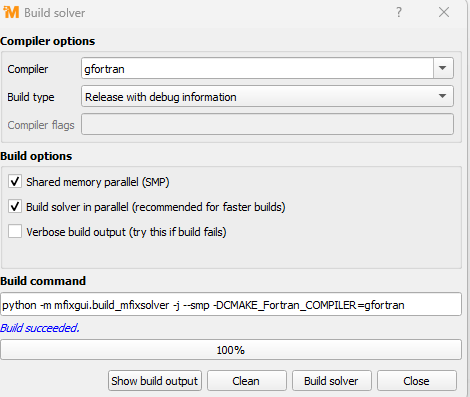

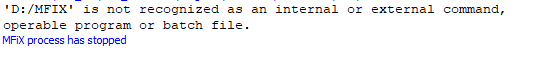

I want to simulate in multiple cores so I built solver in SMP and parallel both and when I run the simulation it is not working, the picture is attached herewith, where I am doing wrong.

The error is attached.

Sorry, I gave you the wrong suggestion. To cap the value of Nu you should take the min, not the max: Nu = min(Nu, 1000.0). I also recommend starting with the serial solver when implementing new code, as it is easier to troubleshoot.
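A quick way to see why max did not help: max returns the larger argument, so a huge Nu passes through unchanged, while min clips it. The Python sketch below uses made-up per-cell values and the 1000 cap from this thread.

```python
NU_CAP = 1000.0  # cap value suggested in this thread


def cap_with_max(nu):
    # max() returns the LARGER argument: huge values pass through unchanged
    # and normal values (e.g. 5-20) are raised to 1000.
    return max(nu, NU_CAP)


def cap_with_min(nu):
    # min() returns the SMALLER argument: values above 1000 are clipped
    # to 1000 and physically reasonable values are left untouched.
    return min(nu, NU_CAP)


nu_cells = [12.0, 7.5, 1.0e40, 18.0]  # hypothetical per-cell values
print([cap_with_max(v) for v in nu_cells])  # [1000.0, 1000.0, 1e+40, 1000.0]
print([cap_with_min(v) for v in nu_cells])  # [12.0, 7.5, 1000.0, 18.0]
```

In the Fortran source, writing the literal as 1000.0d0 keeps both MIN arguments double precision; the Fortran standard requires the arguments of MIN to have the same kind, and some compilers accept mixed kinds only as an extension.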

Thank you, Jeff, it is working now.

One question is still unresolved. I am using the serial solver while developing the new code, but what should I do to run the simulation on multiple cores so that it runs faster?

If you are on Windows, you can only use SMP. If you are planning to run long simulations, I recommend getting a Linux machine, which will allow you to run in DMP mode; DMP is more efficient than SMP.

But first, if you are going to modify the code, you need to be familiar with writing code that runs correctly in SMP or DMP mode. This is beyond the scope of this forum, so if you are not familiar with SMP and DMP, I suggest you spend some time learning these skills.
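As a rough illustration of what DMP involves: the grid is split into blocks, one per MPI process, and every cut between blocks requires exchanging ghost-cell data. In MFiX the split is set with NODESI, NODESJ and NODESK, whose product should match the number of MPI processes (please confirm the details in the MFiX documentation). The helper below is hypothetical, not part of MFiX: it lists the possible splits and picks the one with the smallest total cut area, a crude proxy for communication cost.

```python
def decompositions(nprocs):
    """All (ni, nj, nk) splits with ni * nj * nk == nprocs."""
    return [(ni, nj, nprocs // (ni * nj))
            for ni in range(1, nprocs + 1) if nprocs % ni == 0
            for nj in range(1, nprocs // ni + 1) if (nprocs // ni) % nj == 0]


def cut_area(split, imax, jmax, kmax):
    """Number of cell faces on the cuts between blocks (ghost-cell exchange)."""
    ni, nj, nk = split
    # Each cut normal to I spans jmax*kmax faces, and similarly for J and K.
    return ((ni - 1) * jmax * kmax
            + (nj - 1) * imax * kmax
            + (nk - 1) * imax * jmax)


def best_decomposition(nprocs, imax, jmax, kmax):
    """Hypothetical helper: pick the split with the smallest cut area,
    skipping splits with more blocks than cells in some direction."""
    candidates = [s for s in decompositions(nprocs)
                  if s[0] <= imax and s[1] <= jmax and s[2] <= kmax]
    return min(candidates, key=lambda s: cut_area(s, imax, jmax, kmax))


# Tall cylinder-like grid: 20 x 100 x 20 cells on 4 MPI processes.
print(best_decomposition(4, 20, 100, 20))  # (1, 4, 1)
```

For a tall grid it slices along the long (J) direction. It ignores load balance, which matters for cut-cell geometries like a cylinder, where some cells in each block lie outside the STL and do no work.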

Dear Jeff,

Thank you for the information. Could you point me to documentation or other resources where I can learn SMP and DMP programming?